Biography

Chen-Yu is a Ph.D. candidate at KAUST, expecting to graduate in spring 2023. He began the M.S./Ph.D. program in fall 2018.

His research focuses on tackling the network communication bottleneck in distributed deep learning training workloads.

During his time at Academia Sinica, Taiwan, Chen-Yu worked on techniques for digitizing handwriting and ancient Chinese calligraphy.

- Distributed Deep Learning Training Systems

- Ph.D. in Computer Science, 2023, King Abdullah University of Science and Technology

- M.S. in Computer Science, 2019, King Abdullah University of Science and Technology

- B.S. in Engineering Science and Ocean Engineering, 2016, National Taiwan University

Recent Publications

Projects

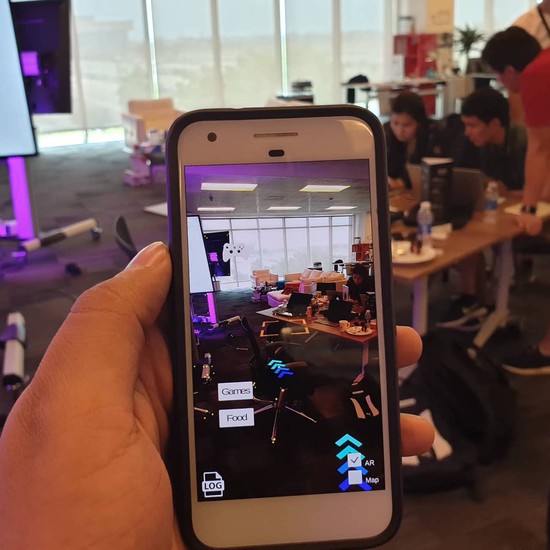

AR-Navigator

Second prize at JUNCTIONxKAUST 2018. Integrates augmented reality into indoor navigation.

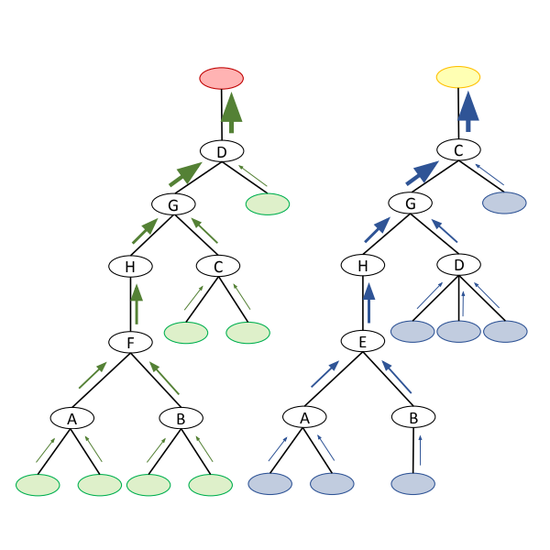

DAIET

DAIET performs data aggregation along network paths using programmable network devices to alleviate communication bottlenecks in distributed machine learning systems

Shaheen Supercomputer Evaluation

Evaluate different processor architectures and programming environments, aiming to reach the technical specifications provided by the chip manufacturers

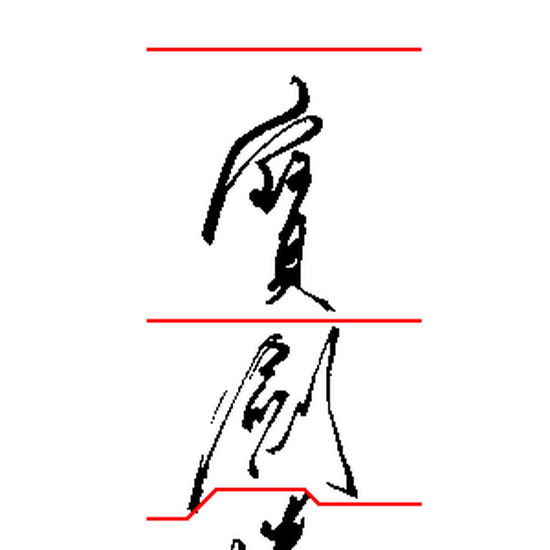

Chinese Character Extraction

Extract handwritten Chinese characters from manuscripts

handwriting.js

A simple API for the incredible handwriting recognition of Google IME

Font Embedding

Efficiently embed Chinese fonts in webpages

Resume

Last Update: January 2023